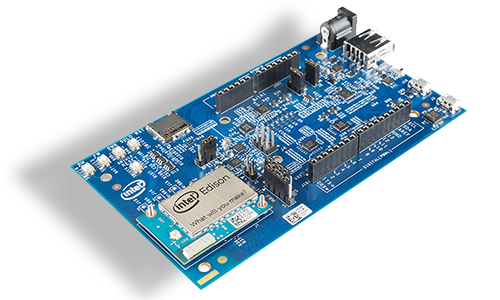

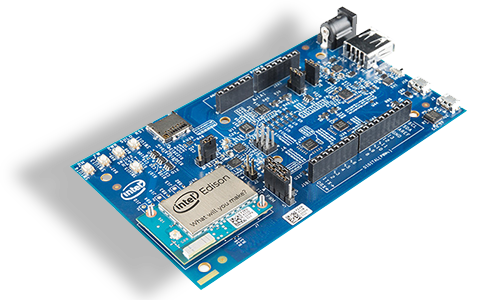

The Intel Edison is a small, Wi-Fi and Bluetooth enabled development board running an Atom processor. It can be mounted on an Arduino-compatible board to interface with the wide variety of shields available.

I was interested in running Node.js and Johnny-Five – an open source framework originally created for robotics – on the Edison and connecting it to Azure IoT Hubs (Microsoft’s PaaS offering for the IoT).

Problem:

- The Azure IoT SDK requires Node.js 0.12 or greater.

- The Yocto project image (the officially supported Linux image for the Edison) only supports Node.js 0.10.

- Updating Node.js on Yocto is a non-trivial task.

Thanks to some ideas and help from Rex St. John and Rick Waldron, I was able to accomplish this.

Install ubiLinux on the Edison

ubilinux is a Debian variant and allows installation onto the internal flash of an Intel Edison platform.

Where the Yocto project is a custom configured Linux image that Intel has built to be lightweight, ubiLinux is a more full-featured Debian variant that, among other things, allows the installation of different versions of Node.js.

The installation instructions for ubiLinux are straightforward:

ubilinux installation instructions for Intel® Edison

Using either PuTTY (Windows) or Terminal (Mac OS X), connect to the Edison over the serial port, setting the baud rate to 115200.

Example – Mac OS X w/ Terminal

screen /dev/cu.usbserial-######## 115200 -L

Replace ######## with the unique ID of your Edison (it's easiest to type screen /dev/cu.usbs, press the Tab key to auto-complete the device ID, then add 115200 -L to it).

Press Enter twice and log in with the user name root and the password edison.

Once connected, set the OS clock to the current UTC time (you need to check and possibly set the time whenever the Edison has been without power for any duration).

Check the current date in the terminal session by executing the following command:

date

If the date is incorrect, execute the following, replacing the date and time with the current UTC time.

date --s='23 FEB 2016 14:34:00'

Remember: You need to check (and possibly correct) the date any time the Edison has been without power.
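
One way to avoid forgetting this step is to have the application check the clock when it starts. An accurate clock generally matters for TLS certificate validation and for time-limited security tokens. The following is a minimal sketch and is not part of the original app: clockLooksSet and EARLIEST_PLAUSIBLE are hypothetical names, and the threshold is an assumption (any date before 2016 is treated as a clock that was reset by a power loss).

```javascript
'use strict';

// Hypothetical threshold (an assumption): a date earlier than this almost
// certainly means the clock was reset after a power loss.
var EARLIEST_PLAUSIBLE = new Date('2016-01-01T00:00:00Z');

// Returns true when `now` is not earlier than the threshold.
// `earliest` is optional and defaults to EARLIEST_PLAUSIBLE.
function clockLooksSet(now, earliest) {
  return now.getTime() >= (earliest || EARLIEST_PLAUSIBLE).getTime();
}

if (!clockLooksSet(new Date())) {
  console.error('System clock looks wrong: ' + new Date().toISOString() +
    '. Set it with the date command before continuing.');
}
```

The check only catches a clock that is obviously stale. It cannot tell whether the time is off by a few minutes.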

Configure Wi-Fi

Use vi to edit the interfaces file (where the Wi-Fi connection information is maintained).

vi /etc/network/interfaces

Edit the following, changing Emutex and passphrase to your SSID and passcode respectively.

#auto wlan0

iface wlan0 inet dhcp

# For WPA

wpa-ssid Emutex

wpa-psk passphrase

Restart the networking service with the following command:

/etc/init.d/networking restart

After networking is restarted, ensure the Wi-Fi is running.

ifup wlan0

You should see output similar to this (note the IP address in the "bound to" line):

root@ubilinux:~# ifup wlan0

Internet Systems Consortium DHCP Client 4.2.2

Copyright 2004-2011 Internet Systems Consortium.

All rights reserved.

For info, please visit https://www.isc.org/software/dhcp/

Listening on LPF/wlan0/90:b6:86:0b:53:2d

Sending on LPF/wlan0/90:b6:86:0b:53:2d

Sending on Socket/fallback

DHCPREQUEST on wlan0 to 255.255.255.255 port 67

DHCPDISCOVER on wlan0 to 255.255.255.255 port 67 interval 5

DHCPREQUEST on wlan0 to 255.255.255.255 port 67

DHCPOFFER from 192.168.1.1

DHCPACK from 192.168.1.1

bound to 192.168.1.54 -- renewal in 39220 seconds.

root@ubilinux:~#

Update APT packages

From the same terminal session, enter the following command:

apt-get update

Install Node Version Manager

From the terminal session, clone the Node Version Manager (NVM) repo.

git clone https://github.com/creationix/nvm.git ~/.nvm && cd ~/.nvm && git checkout `git describe --abbrev=0 --tags`

Then activate NVM

. ~/.nvm/nvm.sh

Next, add these lines to your ~/.bashrc, ~/.profile, or ~/.zshrc file to have it automatically sourced upon login:

export NVM_DIR="$HOME/.nvm"

[ -s "$NVM_DIR/nvm.sh" ] && . "$NVM_DIR/nvm.sh" # This loads nvm

Install a Newer Version of Node.js

Use NVM to install whichever version of Node.js you prefer. For this example I am installing Node.js v4.3.1 (stable and mature), but you could install 0.12.7, 5.0.0, 5.6.0, stable, etc.

From the terminal session, run the following command:

nvm install 4.3.1

This will install Node.js v4.3.1 and make it the current version (e.g. running node -v will tell you the current version).
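
If you want to confirm from inside Node.js that the active version satisfies the SDK requirement listed at the top (0.12 or greater), a small check like the one below can help. This is a sketch under assumptions: parseVersion and meetsMinimum are hypothetical helper names, and the comparison only looks at the major and minor numbers.

```javascript
'use strict';

// Parse a version string such as "v4.3.1" into [major, minor, patch].
function parseVersion(version) {
  return version.replace(/^v/, '').split('.').map(function (part) {
    return parseInt(part, 10);
  });
}

// True when `version` is at least minMajor.minMinor.
function meetsMinimum(version, minMajor, minMinor) {
  var v = parseVersion(version);
  if (v[0] !== minMajor) {
    return v[0] > minMajor;
  }
  return v[1] >= minMinor;
}

// process.version is the version of the Node.js binary running this script.
console.log(process.version + ' meets the 0.12 minimum: ' +
  meetsMinimum(process.version, 0, 12));
```

Save it to a file of your choosing and run it with node. On the stock Yocto image (Node.js 0.10) the check would report false.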

Congratulations! The Edison is now set up with Node.js 4.3.1 and ready to run an app that leverages sensors and communicates with Azure IoT Hubs.

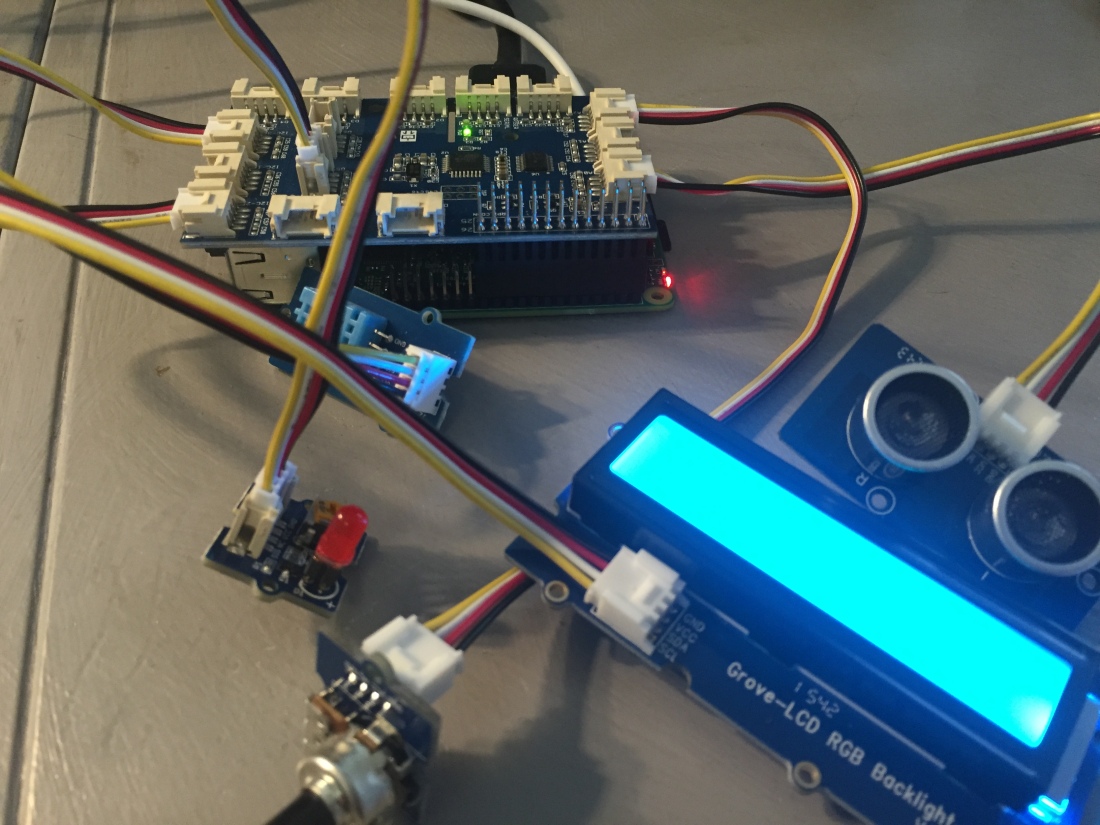

The IoT App

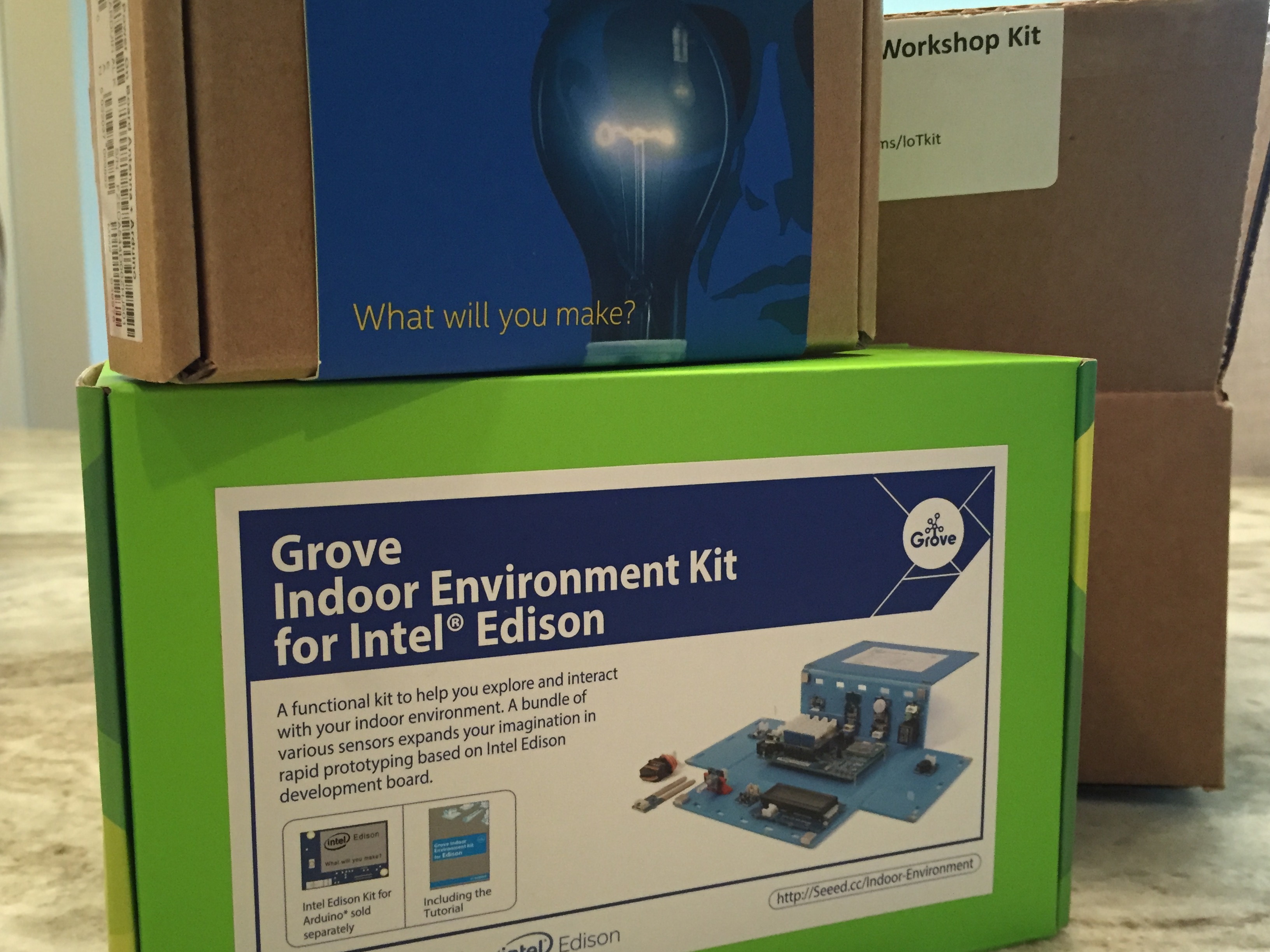

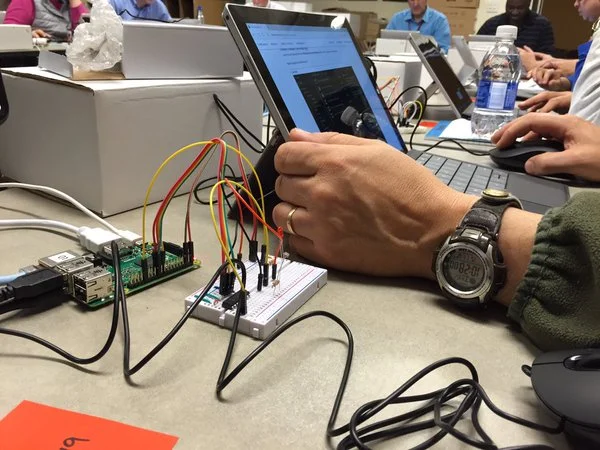

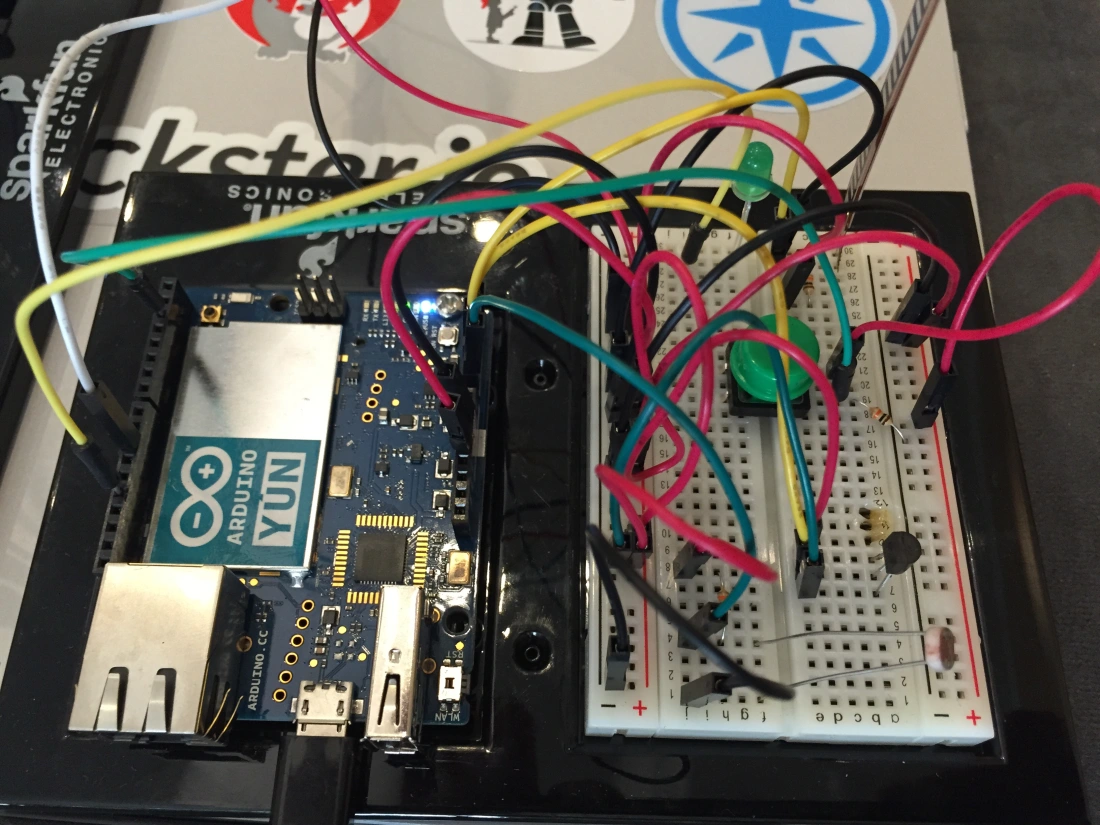

For this example I am using the Grove Starter Kit – Intel IoT Edition from Seeed Studio, although you could use something else if you’d like. The advantage of prototyping/experimenting with the Grove kits is that they eliminate the error-prone wiring and breadboarding and make connecting a variety of sensors to digital, analog and I2C pins easy. The Grove kit includes an Arduino-compatible shield that a wide variety of sensors plug into.

The app I built uses the Grove kit, but you can easily modify it to use sensors on a breadboard – so continue reading even if you don’t have the Grove kit.

I am also assuming that you have set up an Azure IoT Hub (if you haven’t, check out this lab).

Johnny-Five

Johnny-Five is a framework I have been using a lot because it nicely abstracts the GPIO complexities and exposes objects for the development board and sensors. The board and input sensors emit events that invoke callback functions, making it easy to work in an event-driven way.
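
To illustrate the event-driven pattern without any hardware, here is a minimal sketch that uses only Node's built-in EventEmitter. The createFakeThermometer function and its push method are hypothetical stand-ins invented for this example; they are not part of Johnny-Five and only mimic the way a sensor object raises a "data" event.

```javascript
'use strict';

var EventEmitter = require('events').EventEmitter;

// Hypothetical stand-in for a sensor object (NOT a Johnny-Five API).
function createFakeThermometer() {
  var sensor = new EventEmitter();
  sensor.celsius = 0;
  // Simulate a new reading arriving from the hardware.
  sensor.push = function (celsius) {
    sensor.celsius = celsius;
    sensor.emit('data');
  };
  return sensor;
}

var readings = [];
var thermometer = createFakeThermometer();

// The callback runs each time a reading arrives; inside it, `this`
// refers to the object that emitted the event.
thermometer.on('data', function () {
  readings.push(this.celsius);
});

thermometer.push(21);
thermometer.push(24);
console.log(readings);
```

The application later in this post follows the same shape: it registers a callback for the thermometer's data event and reads the current value from this inside the callback.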

Define the Application Dependencies

Start by defining the application manifest, including the dependencies. In your working directory on your PC, create a file called package.json.

{

"name": "thinglabs-iot-sample",

"repository": {

"type": "git",

"url": "https://github.com/ThingLabsIo/IoTLabs/tree/master/Edison/AzureIoT"

},

"version": "0.1.2",

"private":true,

"description": "Sample app that connects a device to Azure using Node.js",

"main": "iot-starter-kit.js",

"author": "Doug Seven",

"license": "MIT",

"dependencies": {

"azure-iot-device": "1.0.1",

"azure-iot-device-amqp": "1.0.1",

"azure-iot-device-http": "1.0.1",

"azure-iot-device-mqtt": "1.0.1",

"johnny-five": "0.9.25",

"edison-io": "0.9.2"

}

}

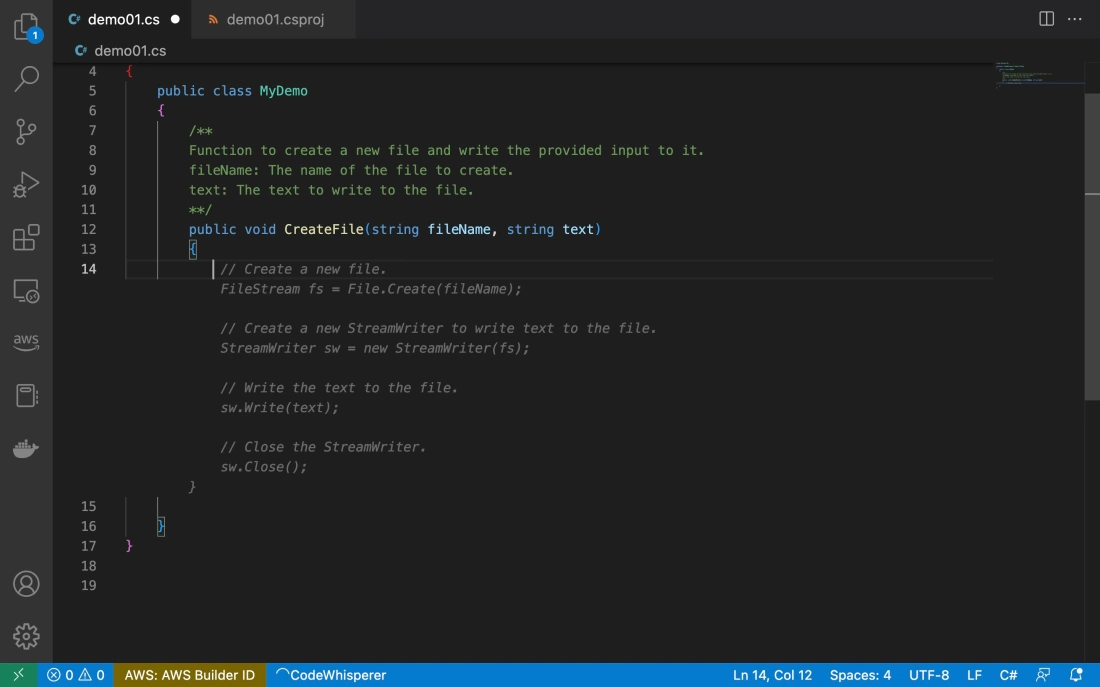

Write the Application

There are a few key concepts to cover in the application, so I have commented it verbosely. Create a new file named iot-starter-kit.js (named after the Grove kit) and add the following:

'use strict';

// Define the objects you will be working with

var five = require("johnny-five");

var Edison = require("edison-io");

var device = require('azure-iot-device');

// Define the client object that communicates with Azure IoT Hubs

var Client = require('azure-iot-device').Client;

// Define the message object that will define the message

// format going into Azure IoT Hubs

var Message = require('azure-iot-device').Message;

// Define the protocol that will be used to send messages

// to Azure IoT Hub

// For this lab we will use AMQP.

// If you want to use a different protocol, comment out

// the protocol you want to replace,

// and uncomment one of the other transports.

// var Protocol = require('azure-iot-device-amqp-ws').AmqpWs;

var Protocol = require('azure-iot-device-amqp').Amqp;

// var Protocol = require('azure-iot-device-http').Http;

// var Protocol = require('azure-iot-device-mqtt').Mqtt;

// The device-specific connection string to your Azure IoT Hub

var connectionString = '[YOUR AZURE IOT DEVICE CONNECTION STRING]';

// Create the client instance that will manage the connection

// to your IoT Hub

// The client is created in the context of an Azure IoT device.

var client = Client.fromConnectionString(connectionString, Protocol);

// Extract the Azure IoT Hub device ID from the connection string

var deviceId = device.ConnectionString.parse(connectionString).DeviceId;

// location is simply a string that you can filter on later

var location = 'Home Office';

// Define the sensors you will use.

var thermometer, lcd, led;

// Define some variables for holding sensor values

// celsius, fahrenheit, red, green, blue

// and initialize them all to 0

var c = 0, f = 0, r = 0, g = 0, b = 0;

// Define the board, which is an abstraction of the Intel Edison

var board = new five.Board({

io: new Edison()

});

// Open the connection to Azure IoT Hub

// When the connection responds (either open or error)

// the anonymous function is executed

var connectCallback = function (err) {

console.log("Azure IoT connection open...");

if(err) {

// If there is a connection error, show it

console.error('Could not connect: ' + err.message);

} else {

console.log('Client connected');

// Create a message and send it to the IoT Hub every five seconds

var sendInterval = setInterval(function () {

sendMessage('temperature', c);

}, 5000);

client.on('message', function (msg) {

console.log('Id: ' + msg.messageId + ' Body: ' + msg.data);

var body = msg.data.split(":");

var indexOfLed = body.indexOf("led");

if(indexOfLed >= 0) {

if(body[indexOfLed+1] === "on"){

led.on();

} else if(body[indexOfLed+1] === "off"){

led.off();

}

}

client.complete(msg, printResultFor('completed'));

// reject and abandon follow the same pattern.

// /!\ reject and abandon are not available with MQTT

});

// If the client gets an error, handle it

client.on('error', function (err) {

console.error(err.message);

});

// If the client gets disconnected, cleanup and reconnect

client.on('disconnect', function () {

clearInterval(sendInterval);

client.removeAllListeners();

client.open(connectCallback);

});

}

}

function sendMessage(src, val){

// Define the message body

var payload = JSON.stringify({

deviceId: deviceId,

location: location,

sensorType: src,

sensorValue: val

});

// Create the message based on the payload JSON

var message = new Message(payload);

// For debugging purposes, write out the message payload to the console

console.log("Sending message: " + message.getData());

// Send the message to Azure IoT Hub

client.sendEvent(message, printResultFor('send'));

}

// Helper function to print results in the console

function printResultFor(op) {

return function printResult(err, res) {

if (err) console.log(op + ' error: ' + err.toString());

if (res) console.log(op + ' status: ' + res.constructor.name);

};

}

// Linear Interpolation

// https://en.wikipedia.org/wiki/Linear_interpolation

function linear(start, end, step, steps) {

return (end - start) * step / steps + start;

}

// The board.on() executes the anonymous function when the

// board reports back that it is initialized and ready.

board.on("ready", function() {

console.log("Board connected...");

client.open(connectCallback);

// Plug the Temperature sensor module

// into the Grove Shield's A0 jack

thermometer = new five.Thermometer({

pin: "A0",

controller: "GROVE"

});

// Plug the LCD module into any of the

// Grove Shield's I2C jacks.

lcd = new five.LCD({

controller: "JHD1313M1"

});

// Plug the LED module into the Grove Shield's D6 jack

led = new five.Led(6);

// The thermometer object will invoke a callback every time it reads data

// as fast as every 25ms or whatever the 'freq' argument is set to

thermometer.on("data", function() {

/*

* The LCD's background will change color according to the temperature.

* Hot -> Warm: Red -> Yellow

* Moderate: Green

* Cool -> Cold: Blue -> Violet

*/

// If there is no change in temp, do nothing.

if (f === Math.round(this.fahrenheit)) {

return;

}

f = Math.round(this.fahrenheit);

c = Math.round(this.celsius);

r = linear(0x00, 0xFF, f, 100);

g = linear(0x00, 0x00, f, 100);

b = linear(0xFF, 0x00, f, 100);

lcd.bgColor(r, g, b).cursor(0, 0).print("Fahrenheit: " + f);

});

});
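
To make the color calculation concrete, the sketch below reuses the linear() formula from the application and evaluates it for a few temperatures. The toChannel and colorFor helpers are hypothetical additions for illustration: they round each channel and clamp it to 0-255, which the application itself does not do. Note that with the end points used in the app the green channel is always 0, so the backlight moves from blue (0 °F) to red (100 °F).

```javascript
'use strict';

// Same formula as linear() in the application.
function linear(start, end, step, steps) {
  return (end - start) * step / steps + start;
}

// Hypothetical helper: round and clamp a value to the 0-255 range.
function toChannel(value) {
  return Math.max(0, Math.min(255, Math.round(value)));
}

// Hypothetical helper: map a Fahrenheit reading to an [r, g, b] triple
// using the same end points as the application.
function colorFor(fahrenheit) {
  return [
    toChannel(linear(0x00, 0xFF, fahrenheit, 100)),
    toChannel(linear(0x00, 0x00, fahrenheit, 100)),
    toChannel(linear(0xFF, 0x00, fahrenheit, 100))
  ];
}

// 75 F: r = 255 * 75 / 100 = 191.25, b = -255 * 75 / 100 + 255 = 63.75
console.log(colorFor(0), colorFor(75), colorFor(120));
```

Without the clamp, a reading above 100 °F or below 0 °F produces channel values outside 0-255, so clamping is a reasonable safeguard if your sensor can see those temperatures.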

Copy App Files to Edison

Using FileZilla you can connect to the Edison using sftp and drag-n-drop files onto the Edison.

- Launch FileZilla and connect to the Edison:

Host: The IP address of the Edison

Username: root

Password: edison

Port: 22

- Press Quickconnect

- Set the Local site path to the directory where your app files are located.

- Under the root directory on the Edison (Remote site), create a new directory named iot-labs.

- Drag the application files from the Local site directory to the Remote site directory.

Run the Application

From the terminal session, move to the iot-labs directory.

cd iot-labs

Use NPM to install the application dependencies.

npm install

Run the application

node iot-starter-kit.js

The application will launch and you will see some initial messages as the board initializes. After a few seconds you should see the temperature displayed on the LCD screen, and every five seconds you should see a message sent (‘enqueued’) to your Azure IoT hub.

root@ubilinux:~/iot-labs/azure-iot# node iot-starter-kit.js

1456213504410 Device(s) Intel Edison

1456213504440 Connected Intel Edison

1456213504469 Repl Initialized

>> Board connected...

Azure IoT connection open...

Client connected

Sending message: {"deviceId":"d7-edison","location":"Home Office","sensorType":"temperature","sensorValue":24}

send status: MessageEnqueued

Sending message: {"deviceId":"d7-edison","location":"Home Office","sensorType":"temperature","sensorValue":24}

send status: MessageEnqueued

You can monitor the messages coming into Azure IoT Hubs by using the iothub-explorer command line utility. In a new terminal window, install the iothub-explorer with NPM.

npm -g install iothub-explorer

Once installed, turn on the iothub-explorer event monitor (this will monitor messages as they come into your Azure IoT hub – so you know they are getting there).

iothub-explorer [YOUR IOT HUB OWNER CONNECTION STRING] monitor-events [YOUR DEVICE ID]

Note: On Mac OS X, put the connection string in quotes. On Windows, do not use quotes.

You should see the same messages that your Edison is sending showing up in the monitor.

Event received:

{ deviceId: 'd7-edison',

location: 'Home Office',

sensorType: 'temperature',

sensorValue: 24 }
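
The event shown by the monitor is the JSON body that sendMessage() builds. As a rough sketch of that round trip, the snippet below builds the same payload and parses it back into an object. buildPayload is a hypothetical helper that mirrors the object literal inside sendMessage(), and the values are the ones from the sample output above.

```javascript
'use strict';

// Hypothetical helper mirroring the payload built inside sendMessage().
function buildPayload(deviceId, location, sensorType, sensorValue) {
  return JSON.stringify({
    deviceId: deviceId,
    location: location,
    sensorType: sensorType,
    sensorValue: sensorValue
  });
}

var payload = buildPayload('d7-edison', 'Home Office', 'temperature', 24);
console.log(payload);

// A consumer can turn the body back into an object and filter on its fields.
var parsed = JSON.parse(payload);
console.log(parsed.location === 'Home Office');
```

Because the body is plain JSON, anything downstream of the hub can filter on location or sensorType without knowing about the device code.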

Sending Cloud to Device Messages

One of the capabilities I slipped into the application without much fanfare is the ability for the Edison to turn an LED on and off based on messages sent to it via the Azure IoT hub. You can look at the application code for this block:

client.on('message', function (msg) {

console.log('Id: ' + msg.messageId + ' Body: ' + msg.data);

var body = msg.data.split(":");

var indexOfLed = body.indexOf("led");

if(indexOfLed >= 0) {

if(body[indexOfLed+1] === "on"){

led.on();

} else if(body[indexOfLed+1] === "off"){

led.off();

}

}

client.complete(msg, printResultFor('completed'));

// reject and abandon follow the same pattern.

// /!\ reject and abandon are not available with MQTT

});

This block listens for incoming messages over AMQP and looks for the string led in the body of the message. If led is found, and the very next item in the body (split on a colon) is the word on or off, then the LED is switched accordingly (this is a simple/crude example of how to do this).
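
The parsing step can be pulled out into a small function so it can be tried without a device or a hub. This is a sketch, not the original code: parseLedCommand is a hypothetical helper, and wrapping the body in String() is an assumption made so the function accepts either a string or a Buffer.

```javascript
'use strict';

// Hypothetical helper: returns "on", "off", or null for a message body.
// String(data) accepts either a string or a Buffer.
function parseLedCommand(data) {
  var parts = String(data).split(':');
  var index = parts.indexOf('led');
  if (index < 0) {
    return null; // no "led" item in the body
  }
  var state = parts[index + 1];
  return (state === 'on' || state === 'off') ? state : null;
}

console.log(parseLedCommand('led:on'));
console.log(parseLedCommand('led:blink'));
```

In the message handler, the result could be used to call led.on() or led.off(), with null meaning the message is ignored.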

You can send messages to the Edison from the iothub-explorer with the following command:

iothub-explorer [YOUR IOT HUB OWNER CONNECTION STRING] send [YOUR DEVICE ID] led:on

Try it a few times using led:on and led:off as the message body.

There you have it. You can now use Node.js on the Intel Edison to send Device-to-Cloud messages and receive Cloud-to-Device messages (which can be command messages).

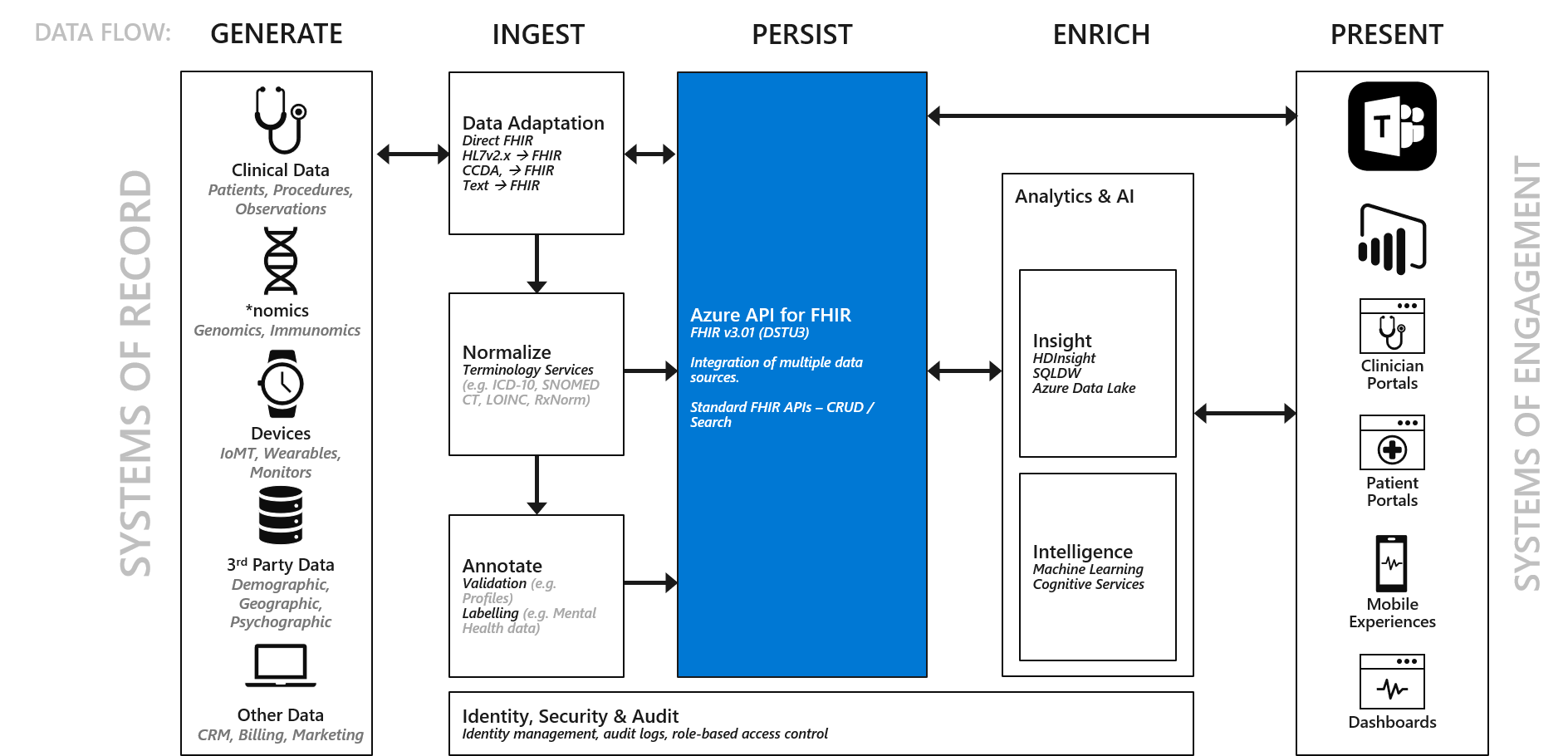

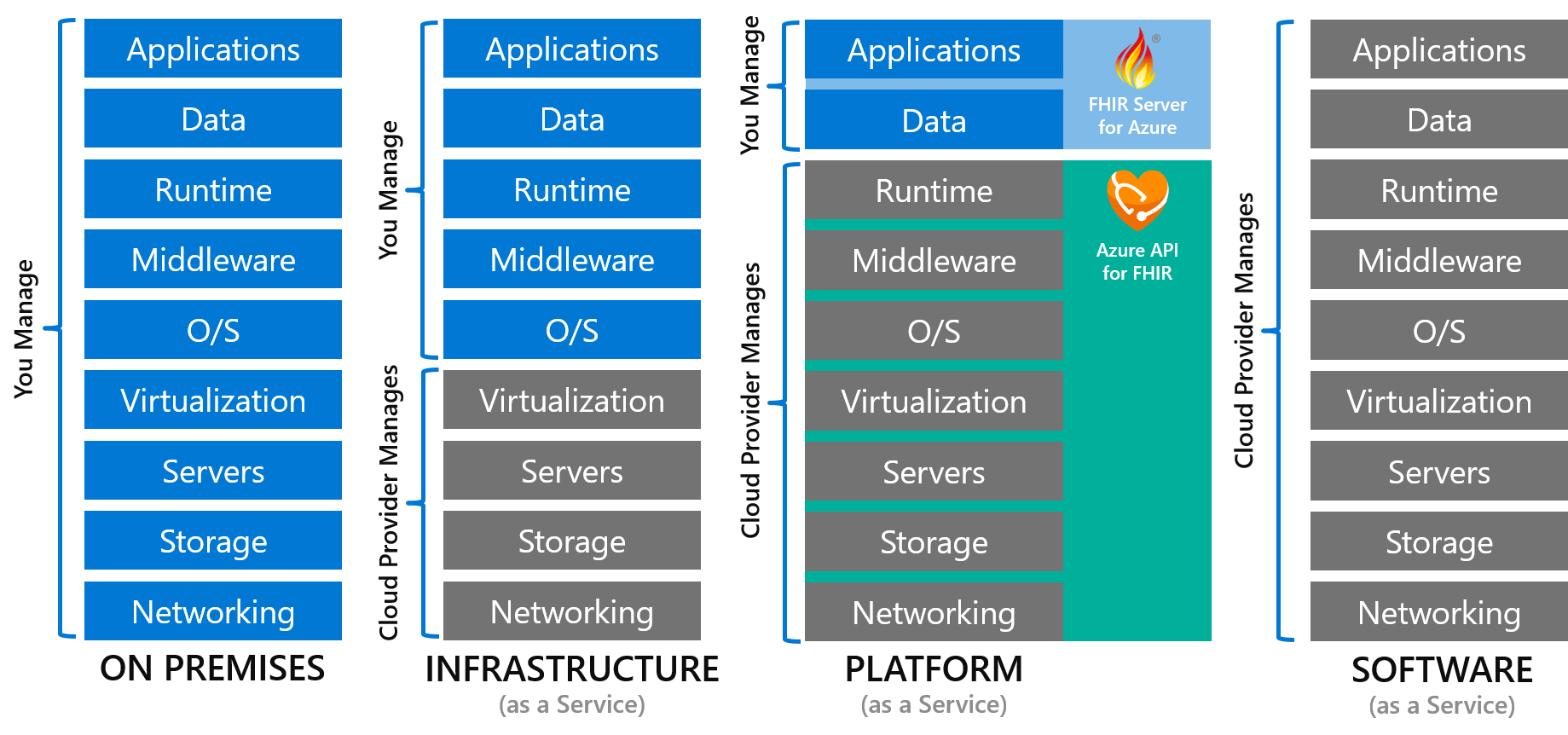

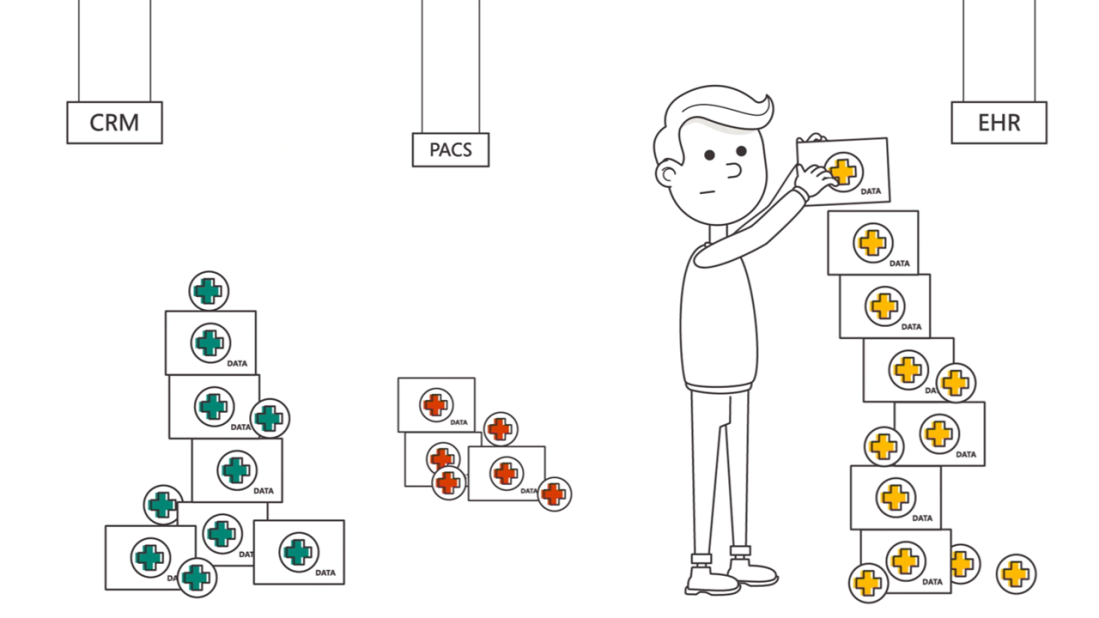

