Technical Debt at Machine Speed

AI coding agents can generate a working REST API in 30 seconds. What they can’t tell you is whether you’ll regret it in 30 days.

I’ve spent the past three years building and deploying AI coding tools, first with Amazon Q Developer (formerly CodeWhisperer) and now enabling 14,000+ developers at Capital One. I’ve seen teams ship features 3-5x faster with AI agents. I’ve also seen their technical debt accumulate at speeds that would have been impossible in the pre-AI era.

The problem isn’t that AI writes bad code. The problem is that AI makes it trivially easy to generate working code, and impossibly hard to generate maintainable code.

Working code gets you through the next sprint. Maintainable code gets you through the next year. And right now, most teams are optimizing for the wrong one.

The AI Technical Debt Pyramid

Technical debt has always existed. What’s changed is the velocity at which it compounds. Here’s how it happens with AI-assisted development.

Level 1: Quick Wins (Week 1)

This is the honeymoon phase. Your team is shipping features faster than ever. The AI generates boilerplate, scaffolds services, writes tests. Velocity metrics are through the roof. Everyone’s excited.

The code works. It passes tests. It ships to production.

What you don’t see yet is that the AI made 47 micro-decisions about data structures, error handling, and abstraction patterns. You reviewed the logic, but you didn’t have time to review every choice. Nobody does at this speed.

Individual decisions look fine. The aggregate pattern doesn’t exist yet.

Level 2: Pattern Proliferation (Month 1)

Now you have six developers using AI agents. Each agent makes slightly different choices about how to structure similar problems. One uses classes. Another uses functional composition. A third mixes both because that’s what the AI suggested and it worked.

You’re not building inconsistent code on purpose. You’re just moving too fast to enforce consistency.

The AI doesn’t care that your team already has three different patterns for the same problem. It will happily generate a fourth because its context window only sees the immediate task, not your entire codebase’s architectural evolution.

Pattern proliferation isn’t a bug. It’s an emergent property of high-velocity, low-coordination development.

Level 3: Architectural Inconsistency (Quarter 1)

Three months in, your codebase has become a museum of approaches. Authentication is handled four different ways across twelve services. Error logging follows three competing philosophies. The abstractions that made sense in isolation don’t compose.

New features take longer because developers spend more time navigating inconsistency than writing code. The AI can’t help here because it can’t see the forest, only trees.

Code review becomes archaeology. “Why did we do it this way?” “I don’t know, the AI suggested it and it worked.”

This is where most teams realize something is wrong. But they’re already three months deep and the cost of refactoring is starting to look prohibitive.

Level 4: Compounding Complexity (Quarter 2+)

Now the real pain begins. Every new feature requires working around the inconsistencies from the previous quarter. Developers are spending more time debugging interactions between AI-generated code than building new capabilities.

The AI is still fast at generating individual components. But integrating those components into the existing system takes longer every sprint because the system itself has become harder to reason about.

You’re generating code at machine speed but understanding it at human speed. The gap between the two is your technical debt, and it’s compounding.

Welcome to the bottom of the pyramid. This is where “move fast” becomes “move carefully or break things we can’t easily fix.”

Optimizing for the Wrong Metric

Here’s what I see happening in teams using AI coding agents.

They measure lines of code generated, features shipped, and time saved on implementation. All of those metrics go up. Leadership is happy. Developers feel productive.

But nobody is measuring whether the code being generated is building toward a coherent system or away from one.

AI agents optimize for making the immediate task work. They don’t optimize for consistency with the rest of your codebase, clarity for future maintainers, or architectural coherence across services.

That’s not a criticism of the AI. That’s just what the tool is good at. The problem is that we’re treating AI-generated code like human-written code, even though human authorship came with natural speed limits that prevented this kind of runaway complexity.

When a human writes code, they can only generate so much before they need to step back and think about what they’ve built. The slowness was a feature, not a bug. It forced periodic reflection.

AI removes that speed limit. And if you don’t replace it with something else, you get the pyramid.

Building Brake Systems for High-Speed Development

The solution isn’t to stop using AI. The solution is to build the organizational equivalent of brake systems that let you develop at high speed while maintaining control.

Here are the brake systems that actually work.

Brake System 1: Spec-Driven Development

Before any AI agent writes a single line of code, write the specification. Not a vague user story. A technical spec that defines interfaces, data models, error handling, and how this component fits into the larger system.

The spec is the contract. The AI executes the contract. You review whether the execution matches the intent.

This sounds like waterfall, but it’s not. You’re not spending months on specs. You’re spending 30 minutes thinking through what you’re about to generate at machine speed. The ratio of planning time to implementation time has shifted dramatically, but the planning step is more important than ever.
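To make that concrete, here is a minimal sketch of what a 30-minute spec might look like when written as code. Everything in it is hypothetical and invented for illustration: the invoice domain, the names `Invoice`, `InvoiceNotFound`, and `InvoiceStore`, and the choice of integer cents. The first three definitions are the spec. The last class stands in for what you would ask the agent to generate against it.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Invoice:
    """Data model: the exact fields every implementation must use."""
    invoice_id: str
    customer_id: str
    amount_cents: int  # integer cents (an assumption) to avoid float rounding


class InvoiceNotFound(Exception):
    """Error contract: a missing invoice raises this instead of returning None."""


class InvoiceStore(Protocol):
    """Interface: the only operations generated code is allowed to expose."""

    def get(self, invoice_id: str) -> Invoice: ...
    def save(self, invoice: Invoice) -> None: ...


class InMemoryInvoiceStore:
    """One possible implementation: the part you would hand to the AI agent."""

    def __init__(self) -> None:
        self._rows: dict[str, Invoice] = {}

    def get(self, invoice_id: str) -> Invoice:
        try:
            return self._rows[invoice_id]
        except KeyError:
            # Honor the error contract from the spec.
            raise InvoiceNotFound(invoice_id) from None

    def save(self, invoice: Invoice) -> None:
        self._rows[invoice.invoice_id] = invoice
```

Reviewing the generated class then becomes a narrower question: does it satisfy `InvoiceStore` and the error contract, or did it invent its own?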

Spec-driven development with AI is like being a film director. You’re not operating the camera, but you’re deciding what to shoot and why. The AI is your camera operator. Exceptional at execution, but it can’t tell you whether the scene makes sense in the larger story.

Brake System 2: Architectural Review Gates

Code review isn’t enough anymore. You need architectural review.

When an AI agent generates a feature, someone needs to ask: Does this follow our established patterns? Does it introduce a new abstraction we’re going to regret? Will this compose with the rest of the system?

These aren’t syntax questions. These are design questions. And they need to be asked before the code merges, not after you’ve shipped six variants of the same pattern.

Set up review gates that specifically check AI-generated code for architectural consistency. Treat AI output as you would code from a junior developer who’s exceptionally fast but doesn’t yet understand your system’s design philosophy.

Brake System 3: The Consistency Tax

Every time you let the AI introduce a new pattern, you’re taking on debt. Sometimes that debt is worth it. Sometimes it’s not.

Make it explicit. When reviewing AI-generated code, ask: “Is this pattern already in our codebase? If not, is introducing a new pattern worth the long-term cost?”

If a new pattern isn’t worth that cost, send the code back and make the AI use the existing pattern. Yes, this slows you down slightly. That’s the point. The consistency tax is what prevents pattern proliferation.

Think of it like maintaining a style guide, except instead of formatting rules, you’re maintaining architectural patterns. The AI can generate any pattern. Your job is to constrain which patterns are allowed.
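Part of that question can be automated. The sketch below is one possible pre-review check, not a complete gate: it parses a file with Python’s standard `ast` module and flags imports that duplicate a concern your team has already standardized. The `APPROVED` and `KNOWN_ALTERNATIVES` tables and the function name `find_new_patterns` are hypothetical, and the library names in them are only illustrative choices.

```python
import ast

# Hypothetical allowlist: the one approved library per concern.
APPROVED = {"http_client": {"httpx"}, "logging": {"structlog"}}

# Hypothetical map from known alternatives to the concern they duplicate.
KNOWN_ALTERNATIVES = {
    "requests": "http_client",
    "urllib3": "http_client",
    "loguru": "logging",
}


def find_new_patterns(source: str) -> list[str]:
    """Return one message per import that duplicates an approved pattern."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            modules = [node.module]
        else:
            continue
        for module in modules:
            root = module.split(".")[0]  # "requests.adapters" -> "requests"
            concern = KNOWN_ALTERNATIVES.get(root)
            if concern is not None:
                approved = ", ".join(sorted(APPROVED[concern]))
                findings.append(
                    f"line {node.lineno}: '{root}' duplicates {concern}; use {approved}"
                )
    return findings
```

A check like this only catches patterns that show up as imports. Competing abstractions inside your own code still need a human reviewer, but the tool can at least make the cheapest part of the consistency tax automatic.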

Brake System 4: Periodic Refactoring Sprints

You can’t prevent all technical debt. You can only manage how fast it accumulates.

Schedule time every quarter to refactor AI-generated code that worked but didn’t age well. Look for patterns that proliferated, abstractions that didn’t compose, and decisions that made sense in isolation but don’t make sense in aggregate.
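One way to decide where a refactoring sprint should start is to count competing patterns per concern. The sketch below assumes you already have an inventory, built by hand or by tooling, that maps each service to the pattern it uses for each concern. The function name `rank_proliferation` and the inventory shape are assumptions made for illustration.

```python
from collections import defaultdict


def rank_proliferation(usage: dict[str, dict[str, str]]) -> list[tuple[str, int]]:
    """Rank concerns by how many distinct patterns are in use, worst first.

    `usage` maps service name -> {concern: pattern}. Concerns that already
    have a single pattern everywhere are left out of the result.
    """
    variants: dict[str, set[str]] = defaultdict(set)
    for concerns in usage.values():
        for concern, pattern in concerns.items():
            variants[concern].add(pattern)
    ranked = [(c, len(p)) for c, p in variants.items() if len(p) > 1]
    # Most variants first; ties broken alphabetically for stable output.
    return sorted(ranked, key=lambda item: (-item[1], item[0]))


# Hypothetical inventory for three services.
inventory = {
    "billing": {"auth": "jwt-middleware", "errors": "exceptions"},
    "search": {"auth": "api-key-header", "errors": "exceptions"},
    "notifications": {"auth": "session-cookie", "errors": "result-objects"},
}
print(rank_proliferation(inventory))
```

The count is a rough signal, not a verdict. Two patterns can be a deliberate choice, but a concern with the most variants is usually a reasonable first candidate for consolidation.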

This isn’t wasted time. This is the cost of developing at AI speeds. You’re essentially compressing a year’s worth of technical debt into a quarter, which means you need to compress a year’s worth of cleanup into scheduled refactoring time.

Teams that skip this step end up with the compounding complexity problem. Teams that embrace it maintain velocity over the long term.

Brake System 5: AI Context Engineering

The better your AI understands your existing system, the less likely it is to generate inconsistent code.

This means investing in tooling that gives the AI broader context. That might be semantic code search, architectural documentation the AI can reference, or examples of preferred patterns that get injected into prompts.
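The simplest version of pattern injection is a prompt builder that prepends your preferred patterns to every task. This is a minimal sketch under stated assumptions: `PREFERRED_PATTERNS`, its two rules, and `build_prompt` are all hypothetical, and a real setup would more likely pull the rules from architectural documentation or code search than from a hard-coded dictionary.

```python
# Hypothetical pattern catalog; in practice this would come from your
# architectural docs or a semantic search over the codebase.
PREFERRED_PATTERNS = {
    "error handling": "Raise domain-specific exceptions; never return None on failure.",
    "logging": "Use the shared structured logger; no print statements.",
}


def build_prompt(task: str, patterns: dict[str, str] = PREFERRED_PATTERNS) -> str:
    """Prefix a task description with the patterns the agent should follow."""
    rules = "\n".join(f"- {topic}: {rule}" for topic, rule in patterns.items())
    return (
        "Follow these architectural patterns from our codebase:\n"
        f"{rules}\n\n"
        f"Task: {task}\n"
    )


print(build_prompt("Add an endpoint that returns a customer's open invoices."))
```

The design choice here is that the patterns travel with every request, so the agent sees your conventions even when its context window contains nothing else from the codebase.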

Some teams are building “style guides for AI” that define not just coding standards but architectural patterns the AI should follow. When the AI generates code, it’s checked against those patterns before it gets reviewed by humans.

This is expensive upfront. But it prevents the pattern proliferation problem at the source.

AI Doesn’t Make Technical Debt Optional

A lot of teams are treating AI coding agents like they’ve solved the productivity problem. Ship faster, ship more, ship now.

What they’re actually doing is borrowing against the future at rates they don’t yet understand.

AI doesn’t eliminate technical debt. It just moves the debt from the writing phase to the review and maintenance phases. And if you’re not prepared for that shift, you’re going to hit a wall around month three when the compounding complexity catches up.

The teams that are succeeding with AI coding agents aren’t the ones generating the most code. They’re the ones who figured out how to slow down in the right places so they can stay fast in aggregate.

Spec first. Review for architecture, not just correctness. Enforce consistency even when the AI suggests something that works. Schedule refactoring as part of your velocity, not as a luxury when you have time.

These aren’t optional. These are the brake systems that let you develop at machine speed without crashing into technical debt you can’t recover from.

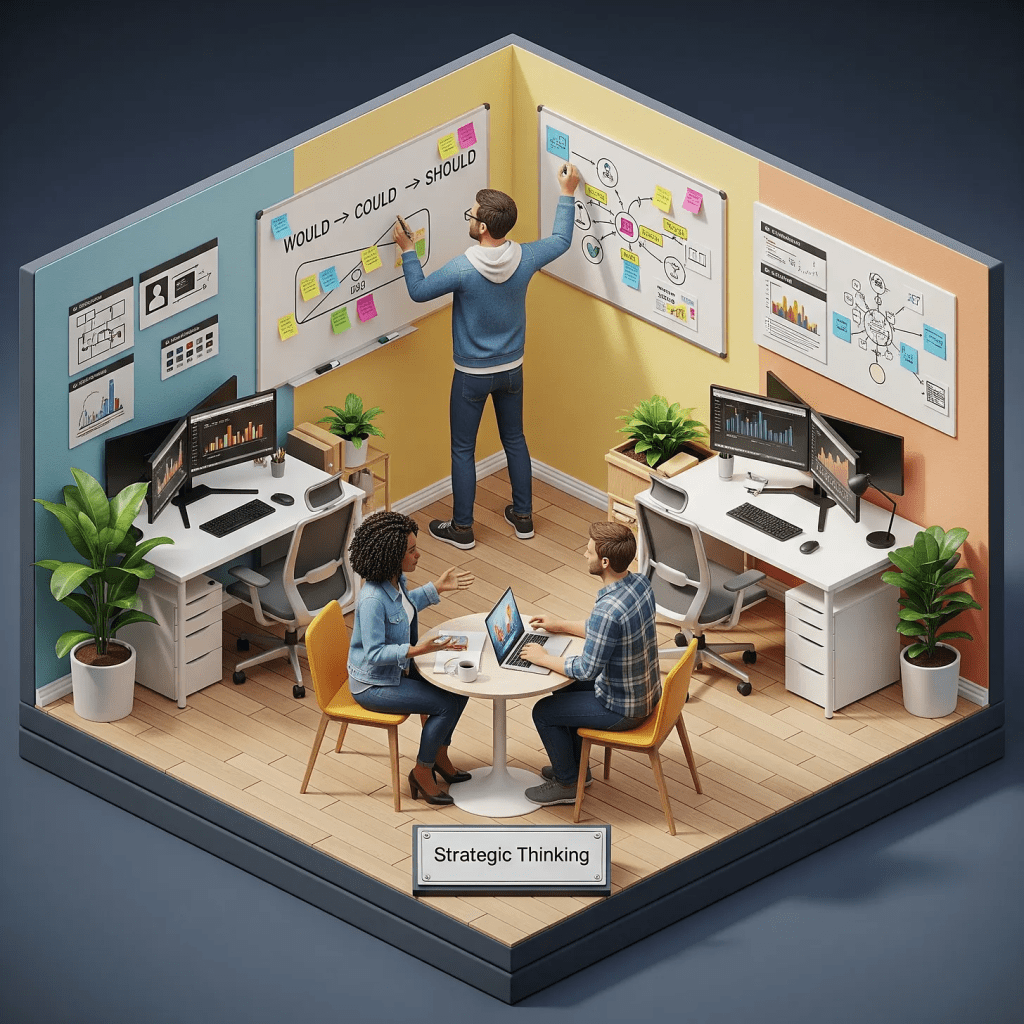

Knowing When to Slow Down

The most underrated skill in AI-assisted development isn’t prompt engineering or code review. It’s judgment about when to slow down.

When to write a spec instead of letting the AI run. When to refactor instead of shipping. When to enforce a pattern instead of accepting what works. When to say “this is fast but it’s not right.”

AI agents will keep getting faster. The code they generate will keep getting better. But they’re never going to understand your system the way you do. They’re never going to know which shortcuts will cost you later.

That’s still your job. And it’s more important now than it’s ever been.

The hidden cost of AI code isn’t the code itself. It’s the discipline required to use it well.

What brake systems is your team using to manage AI-generated technical debt? I’m genuinely curious what’s working.
